Configure Custom Trigger Runs- dbFlux (3/9)

published on

Hi guys this is the third article in my nine part series for the key features of dbFLux. The last one was about creating database objects. This article is about Custom Trigger Runs or how to automatically create TableAPIs with dbFlux.

In our example I use the three schema model, which separates the data, the business logic and the application logic. In such an architecture it makes sense to provide access to the data only via interfaces. For this purpose I use a Table API and an Access API.

To automate the creation of these packages, which are mainly based on tables, dbFlux offers so called Custom Trigger Runs. In short: With dbFLux you can define which additional files should be executed when you compile a file with the command dbFlux: Compile Current File.

You have probably wondered what these .hook folders are for. They are part of smartFS, our standard for Oracle database projects. These .hook folders play a major role later on, during the deployment. Here you have the possibility to place scripts, which will be called depending on the deployment mode and time. dbFLux can use these folders by the use of the Custom Trigger Runs too. In my example I place a file generate_table_api.sql in the directory todo_data/.hooks/post. I put another file in the directory todo_logic/.hooks/pre. With the first file we create a TableAPI in the DATA schema and with the second one an API that makes the TableAPI of the DATA schema accessible for the Logic layer.

To use the Custom Trigger Runs you have to configure them. This is of course done in the Workspace Settings.

"dbFlux.customTriggerRuns": [

{

"triggeringExpression": "db\/todo_data\/(tables|tables/tables_ddl)\/.+\\.sql",

"runFile": "db/todo_data/.hooks/post/generate_table_api.sql",

"runFileParameters": ["dev", "dev", "${fileBasename}"]

},

{

"triggeringExpression": "db\/todo_data\/(tables|tables/tables_ddl)\/.+\\.sql",

"runFile": "db/todo_logic/.hooks/pre/010_generate_access_api.sql",

"runFileParameters": ["dev", "dev", "${fileBasename}"]

}

],

As you can see, whenever a file from the tables or tables/tables_ddl directory is "compiled" with dbFlux, the defined files are automatically executed afterwards. And this independently of the actual database connection of the source file. dbFlux makes use here also of the possibility of VSCode existing runtime variables, as for example the current opened file, as parameter to such a file to pass. Especially when generating a TabelAPI this definitely makes sense, you don't always want to regenerate all APIs for the whole schema. In my case this is the third variable. The other two are not used by dbFlux. They will be used later during deployment. But that will be another blog post series.

Such a hook-script is a normal SQL script, which defines the expected parameters in the top section. Something like this:

set define '^'

set concat on

set concat .

set verify off

set serveroutput on

set linesize 2000

set wrap off

set trimspool on

set termout off

column 1 new_value 1

column 2 new_value 2

column 3 new_value 3

select '' "1" from dual where rownum = 0;

select '' "2" from dual where rownum = 0;

select '' "3" from dual where rownum = 0;

define _parameter_01 = ^1 "0.0.0"

define _parameter_02 = ^2 "undefined"

define _parameter_03 = ^3 "ALL_TABLES"

define VERSION = ^_parameter_01

define MODE = ^_parameter_02

define ALL_TABLES = ^_parameter_03

set termout on

prompt ********************************************************************

prompt * VERSION <^VERSION>

prompt * MODE <^MODE>

prompt * ALL_TABLES <^ALL_TABLES>

prompt ********************************************************************

prompt ...With the command dbFlux: Compile current File or the shortcut Ctrl+Alt+B the table script can now be executed. After that dbFlux will execute the two API scripts.

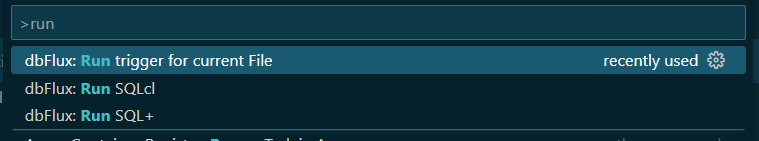

With the command dbFlux: Run trigger for current File you can execute just the Custom Trigger Runs for the current file.

In the next post I will talk about how dbFLux can support you when you want to make changes to tables.

List of the single articles of this series:

1. Initialize Workspace

2. Create Database Objects

3. Configure Custom Trigger Runs